Task Scope Guard

AI Task Scope Guard is a conceptual feature designed to help teams recognize scope expansion early and respond intentionally—without blocking work, assigning blame, or over-automating decisions.

Project background

Product manager

Defined business goals and success metrics

Aligned stakeholders and prioritized features

UX Researcher

Planned and conducted user interviews

Synthesized behavioral insights

UX Designer

Led interaction design and prototyping

Translated research insights into flows and UI

Project manager

Managed timeline, dependencies, and delivery

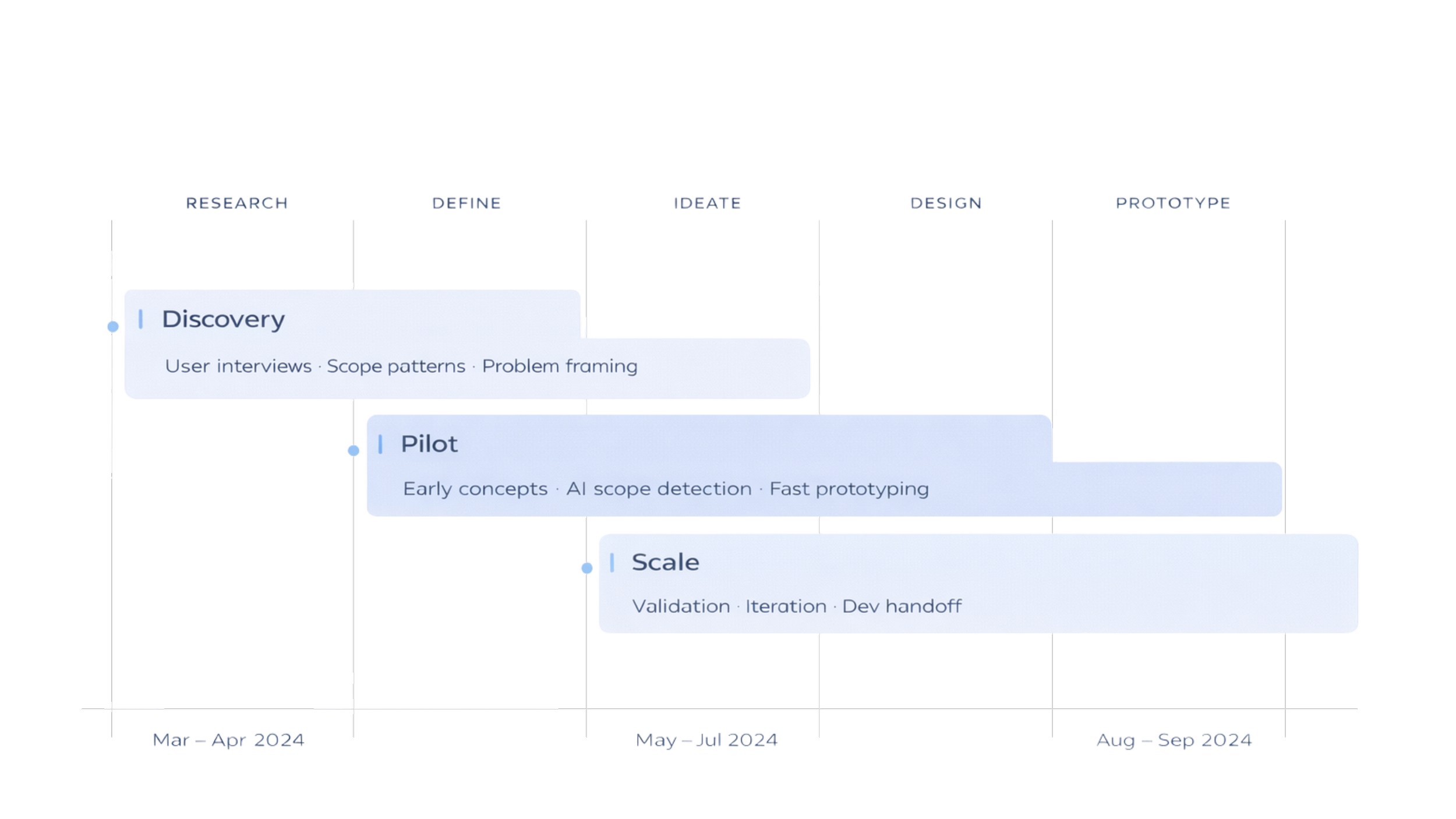

Timeline: March 2024 – September 2024

The problem

Lack of visibility into informal scope changes led to missed deadlines and burnout.

From a business perspective, teams consistently struggled with delivery predictability. Tasks often started with reasonable estimates, yet deadlines were missed as work quietly expanded over time. Scope changes were introduced informally through comments, chats, and meetings, leaving extra effort unacknowledged and difficult to track. As a result, teams experienced burnout, and managers lacked clear evidence to explain delays or realign expectations with leadership.

Early Thinking (Initial Direction)

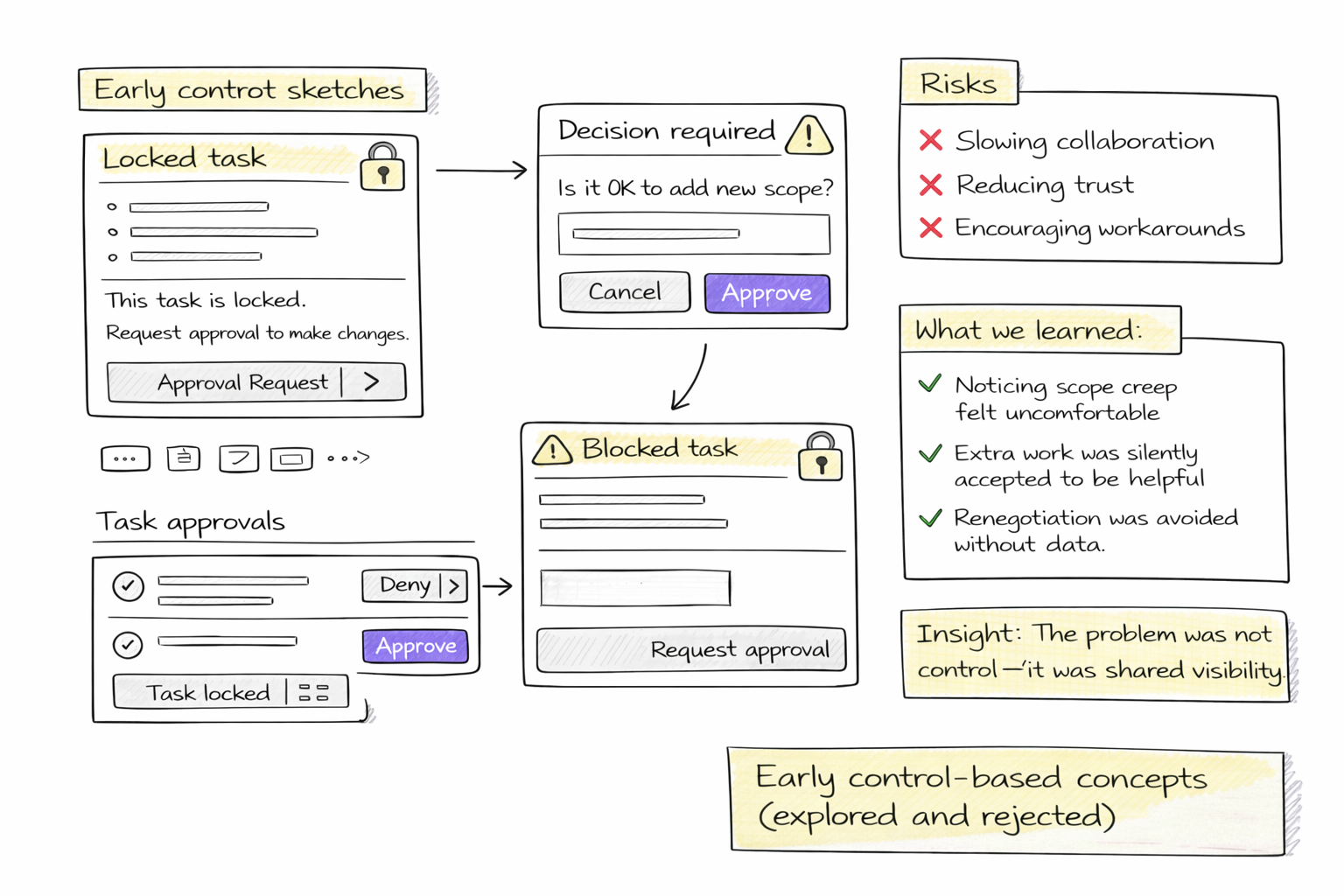

At the beginning of the project, early ideas naturally leaned toward control-based solutions. From a business perspective, the instinct was to prevent scope creep by tightening the system itself—adding stricter approvals, locking task definitions once work began, and relying on automated enforcement rules to limit changes.

However, as we explored these ideas further, it became clear that they introduced new risks. Heavy controls slowed collaboration, reduced trust between teammates, and often pushed scope changes into unofficial channels. Instead of increasing transparency, these approaches encouraged workarounds and made the real problem harder to surface.

The problem was not control—it was the absence of a shared, neutral way to make scope changes visible and discussable.

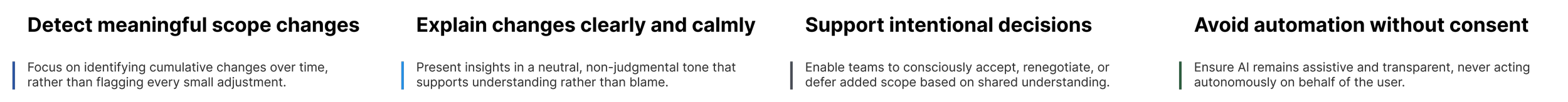

Product Goals

Make scope changes visible and explainable

Support healthy renegotiation

Reduce silent overwork

Preserve human decision-making

Envisioned Future

AI acts as a quiet observer:

Capturing context over time

Surfacing insights when they matter

Supporting conversations—not enforcing outcomes

What We Knew at the Start

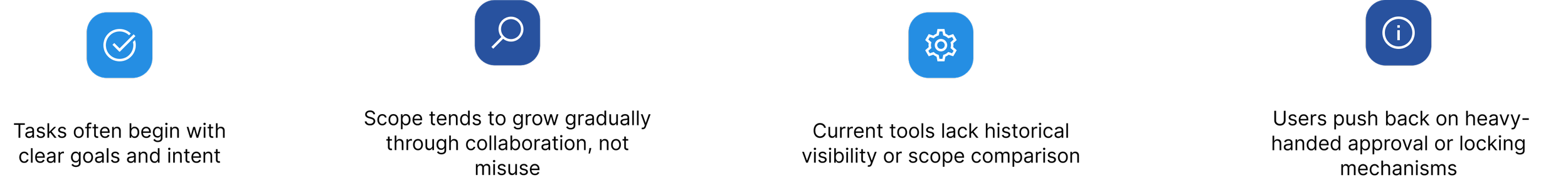

At the beginning of the project, both business and delivery teams shared a common understanding of how work typically evolved. Tasks usually started with a clear intent and reasonable estimates, but over time, collaboration introduced small additions that gradually changed the scope. Existing tools captured the current state of work, yet offered no way to compare how a task had evolved from its original definition. Importantly, users expressed strong resistance to rigid controls or enforcement mechanisms that could undermine trust and collaboration.

Key assumptions validated early

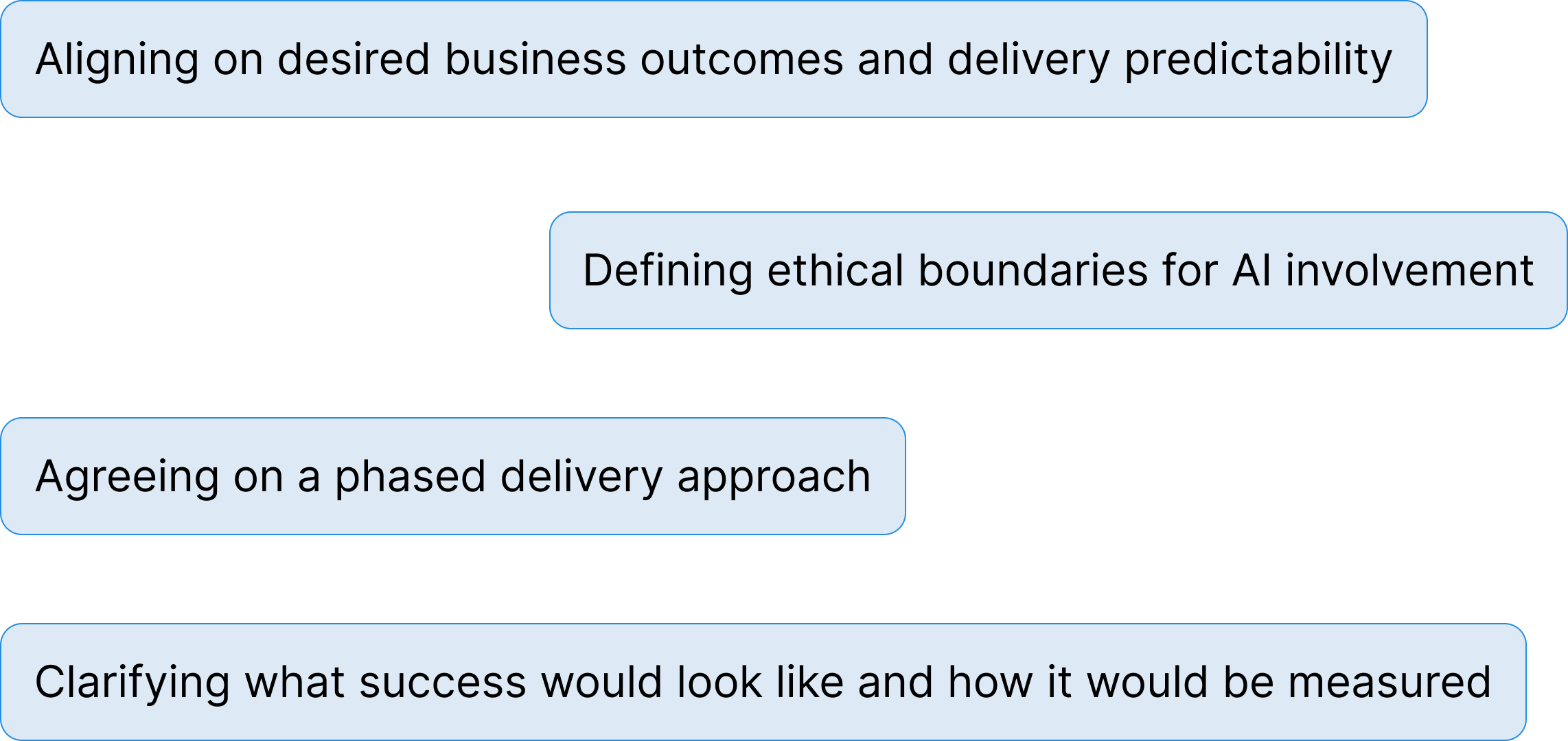

Stakeholder Alignment

Early stakeholder sessions were critical in setting the foundation for the product. Rather than jumping into features, we aligned on outcomes, boundaries, and expectations. A strong emphasis was placed on ensuring that AI would remain supportive and transparent—never punitive or autonomous in decision-making. Stakeholders also agreed on delivering the product in phases, allowing insights and feedback to shape the solution over time.

Research Insights That Shaped the Design

Through interviews, focus groups, and task walk-throughs, several consistent behavioral patterns emerged. These insights shifted the team away from control-heavy solutions toward visibility-driven design.

Scope creep is usually visible in hindsight

Participants rarely noticed scope expansion while it was happening. Instead, it became clear only after deadlines slipped or workloads increased, highlighting the need for historical comparison rather than real-time enforcement.

People avoid renegotiation without evidence

While users were often aware that work had grown beyond its original intent, they felt uncomfortable raising concerns without concrete data. This led to silent acceptance of extra work rather than open discussion.

Visual comparison works better than metrics

Users struggled to interpret abstract numbers or percentages. Simple before-and-after views of task scope were far more effective in helping them recognize change and understand impact.

AI is trusted more when it explains why

Participants expressed higher trust in AI when insights were accompanied by clear reasoning and context, rather than opaque recommendations or alerts.

Alerts create anxiety; reflection builds trust

Interruptive notifications were often perceived as stressful or punitive. In contrast, reflective prompts embedded in task views encouraged thoughtful decision-making without pressure.

Defined Goals

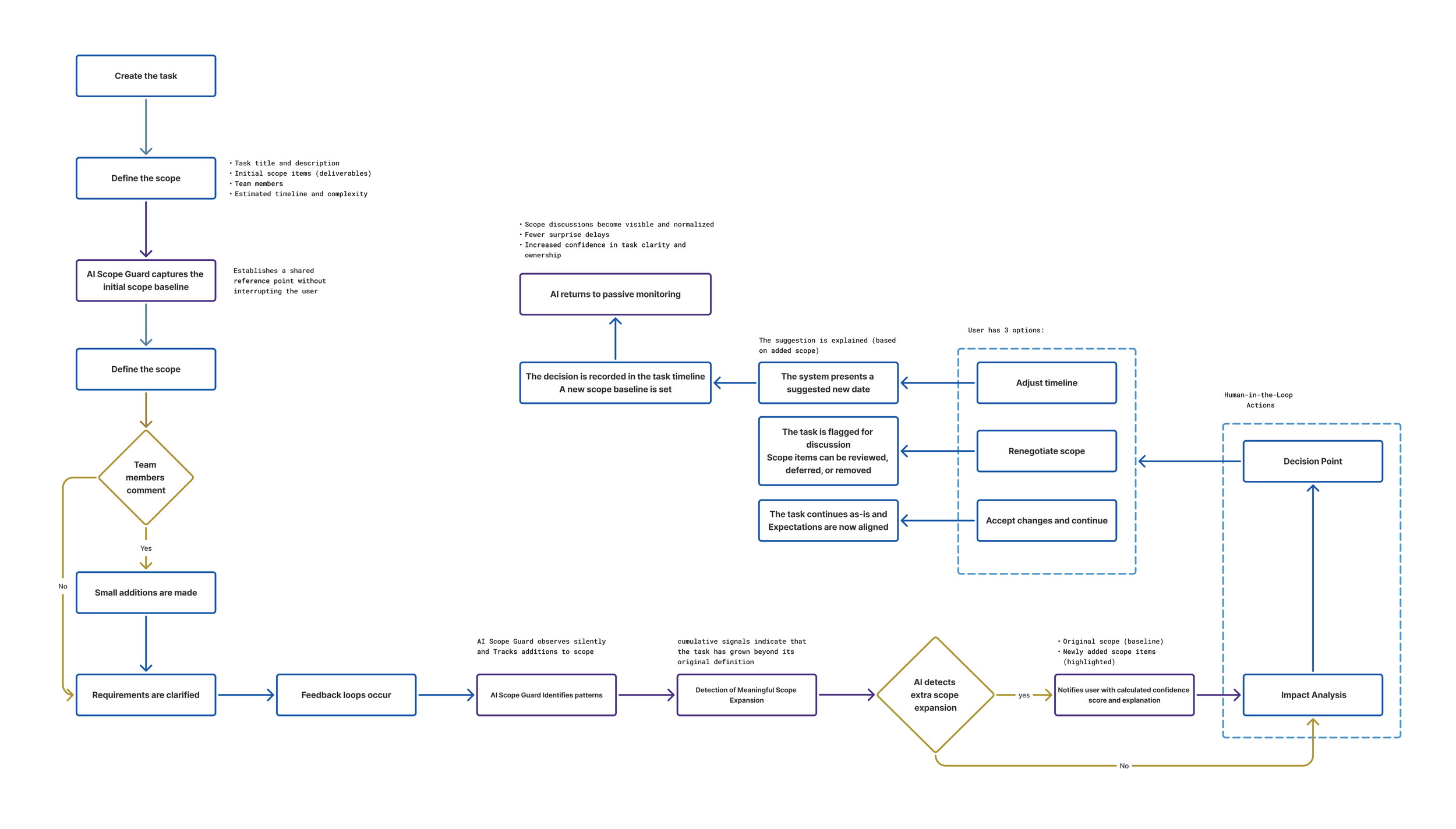

Solution and user flow

This solution uses AI to quietly track how tasks evolve over time and surface meaningful scope changes only when they matter. By visually comparing the original task intent with the current scope and clearly explaining the impact, it helps teams recognize when work has grown without creating friction or pressure. Decisions such as adjusting timelines, renegotiating scope, or accepting changes remain fully human-led, ensuring transparency and shared ownership while reducing silent overwork and unexpected delays.

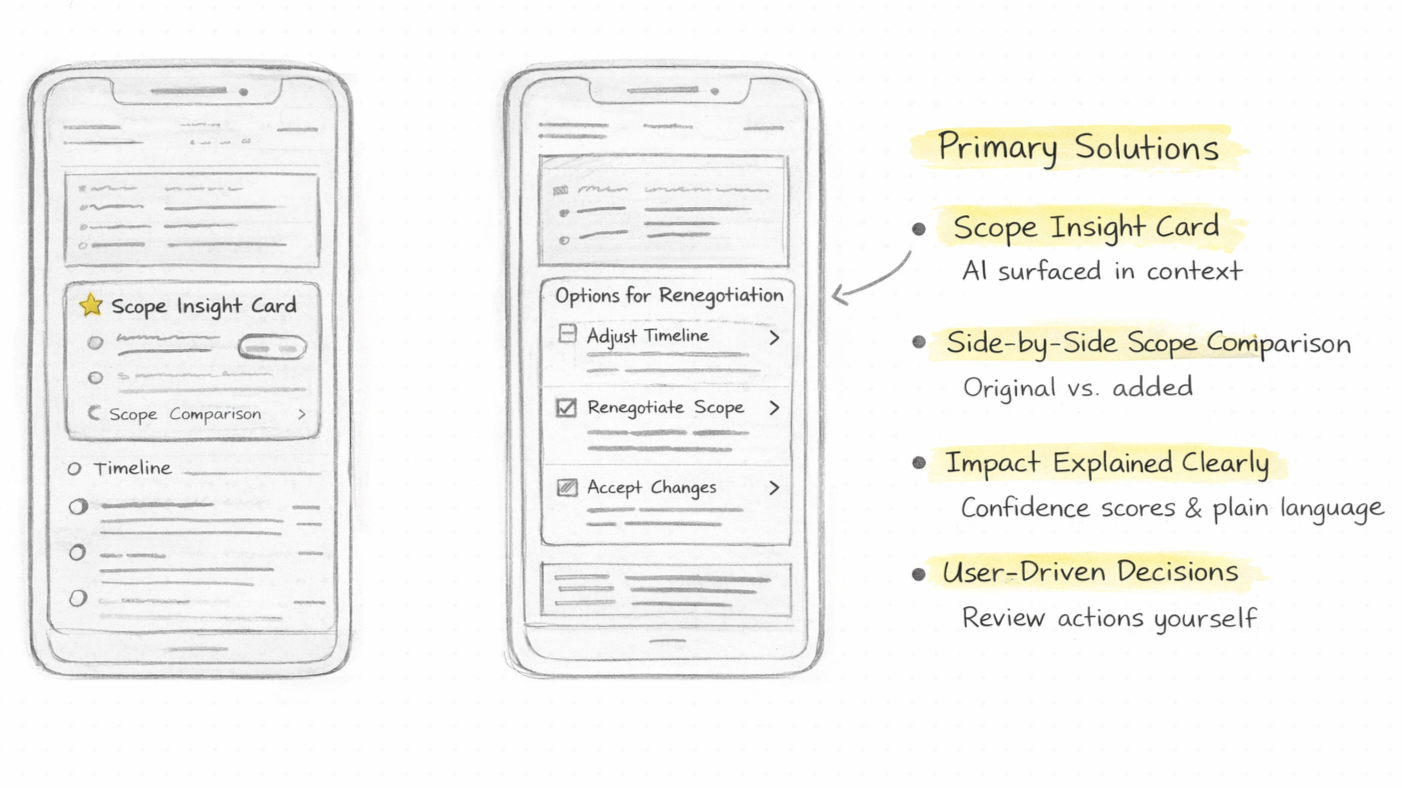

Rather than adding approvals or restrictions, the initial sketches embed a lightweight scope insight directly within the task view, allowing users to see how the original intent has evolved over time. By visually comparing what was initially defined with what has been added through collaboration, the design helps teams recognize scope growth in context rather than in hindsight. The second sketch introduces clear, human-led response options, such as adjusting timelines, renegotiating scope, or intentionally accepting the changes, reinforcing the idea that AI should explain and support, not decide. Together, these sketches establish the core direction of the solution: increasing shared awareness and enabling intentional decision-making while preserving trust and autonomy.

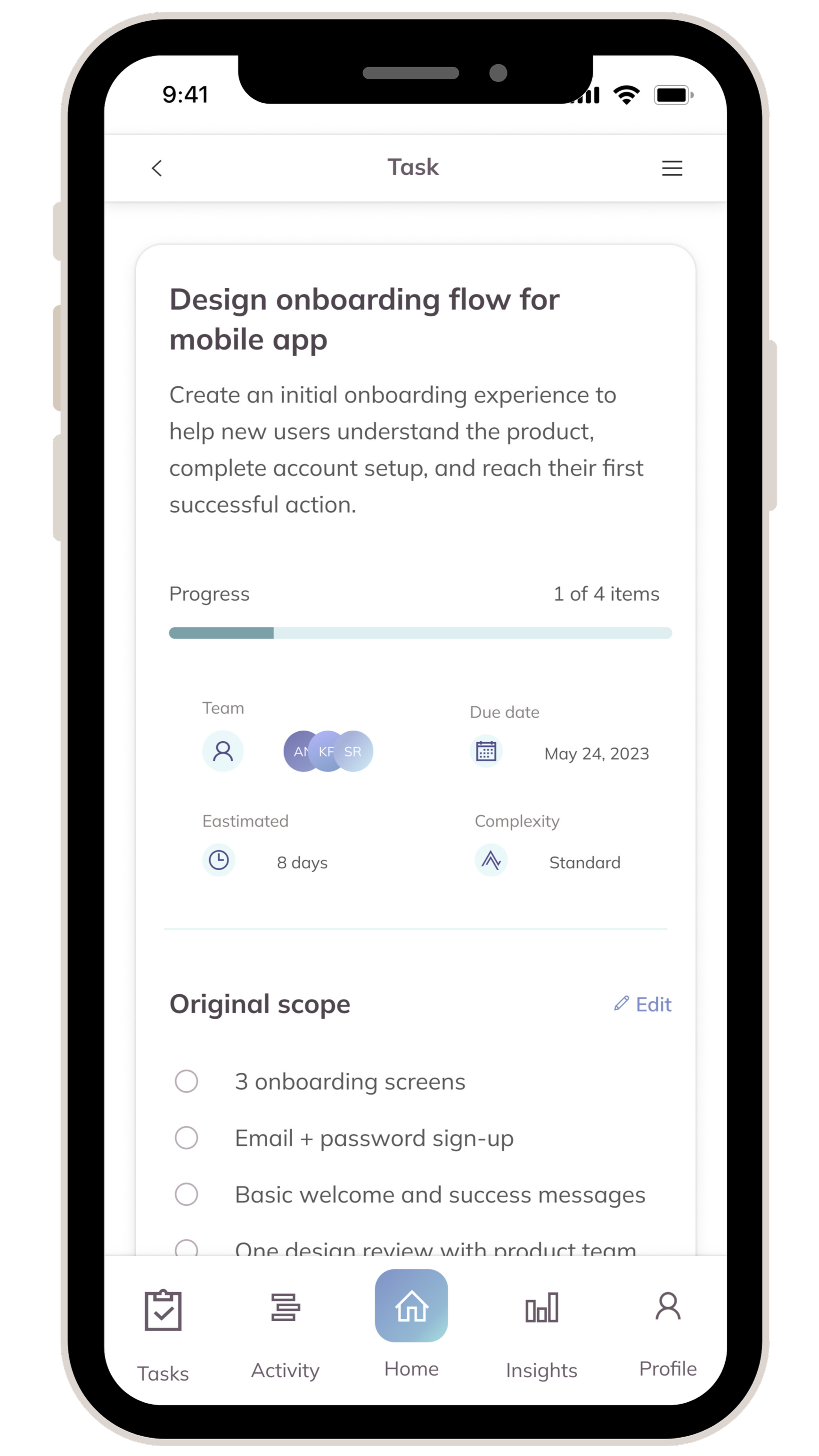

Solution 1: Making Scope Visible, Traceable, and Easy to Understand

Clear task context

Key details (progress, team, timeline, complexity) are surfaced at the top so users can understand the task at a glance.

Explicit original scope

Listing initial scope items sets shared expectations early and creates a reference point for future changes.

Non-intrusive AI introduction

The scope baseline card explains the AI’s role transparently, framing it as observational rather than controlling.

Trust through clarity

The baseline confidence indicator reassures users without exposing technical complexity.

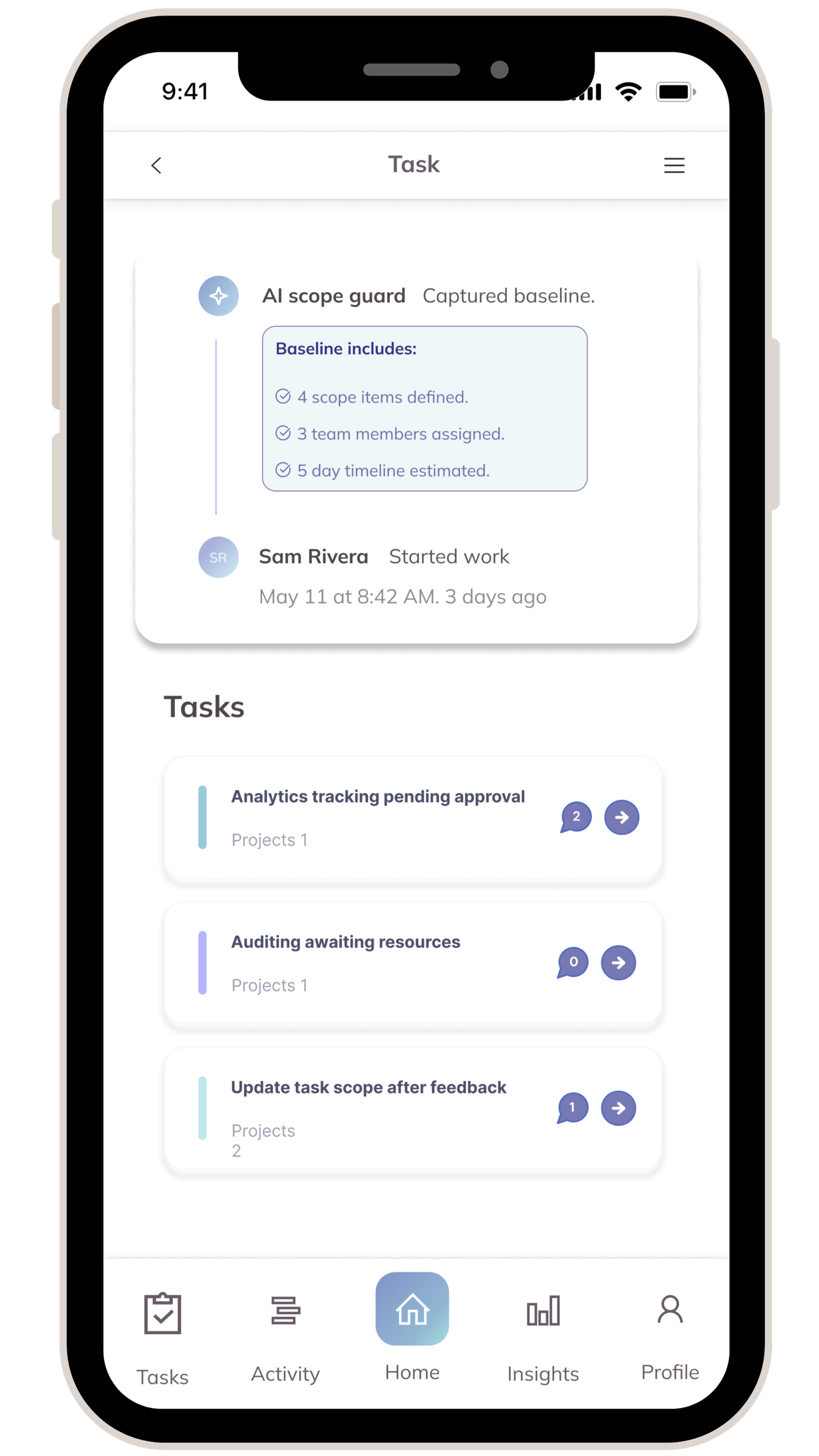

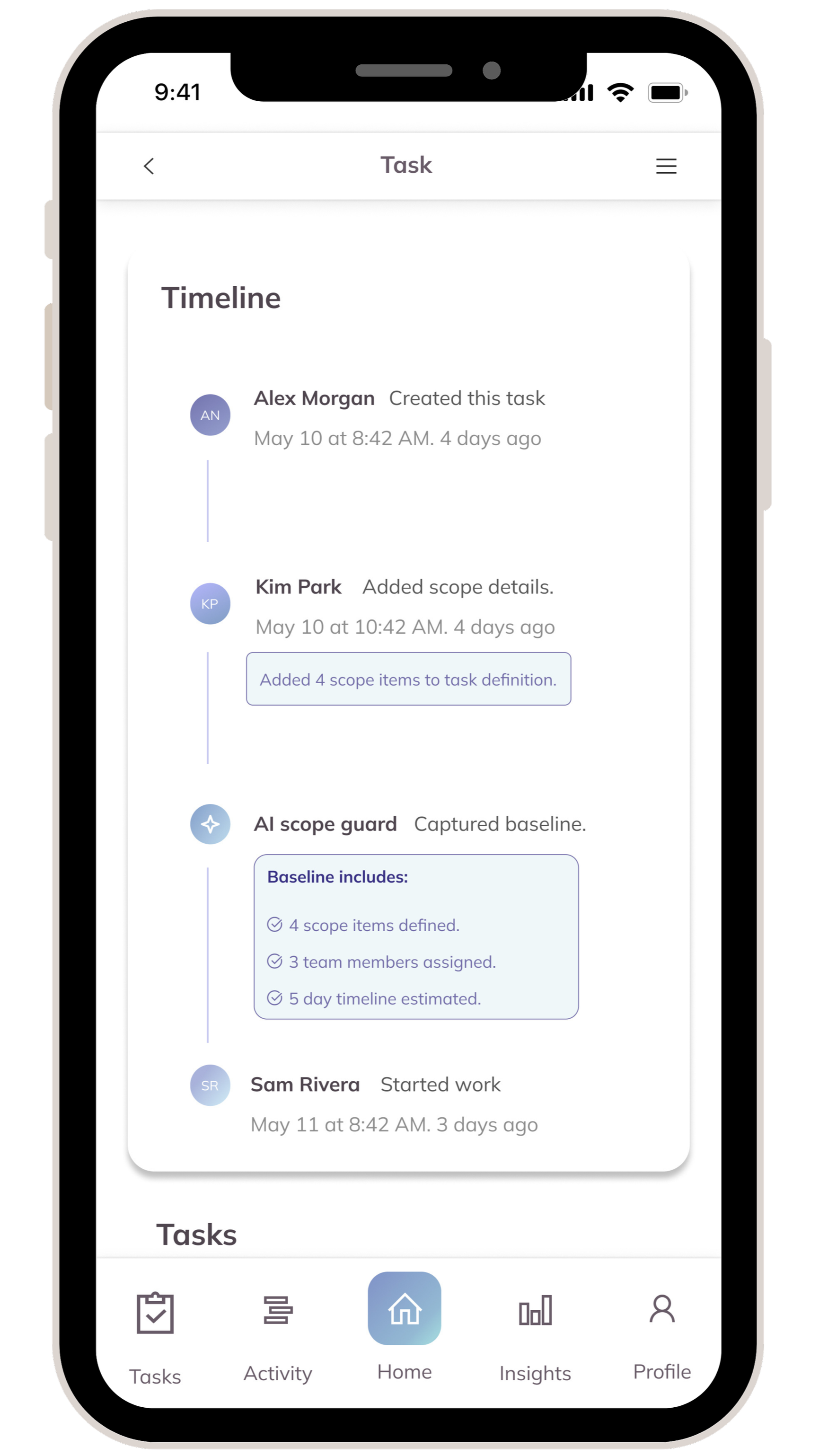

Contextual AI in the timeline

The scope baseline appears as a timeline event, reinforcing that AI insights are part of the task’s natural history rather than a separate system.

Progressive disclosure

The baseline summary shows only essential information (scope items, team size, timeline) to avoid cognitive overload on mobile.

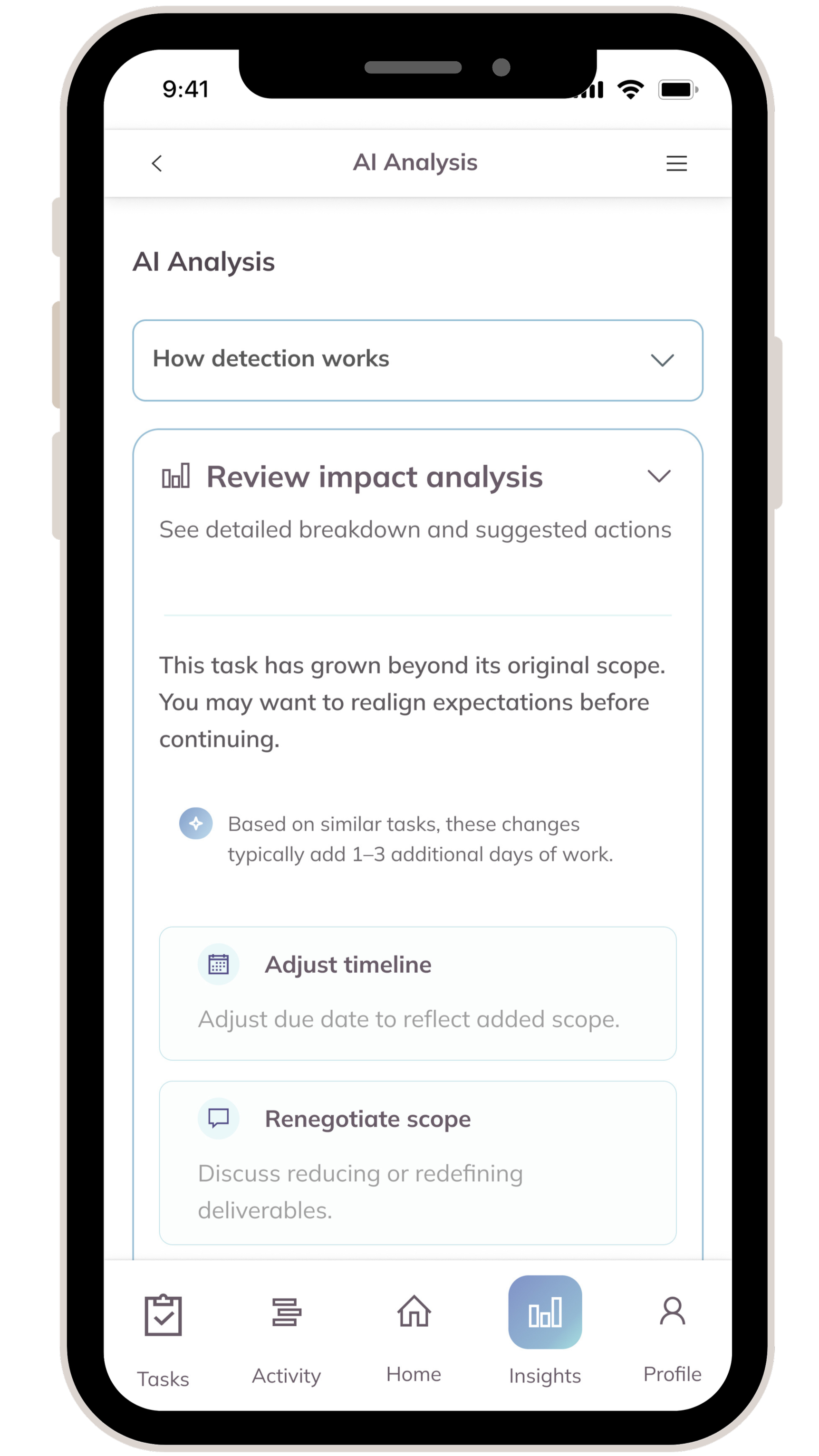

Solution 2: Supporting Intentional Timeline Adjustment

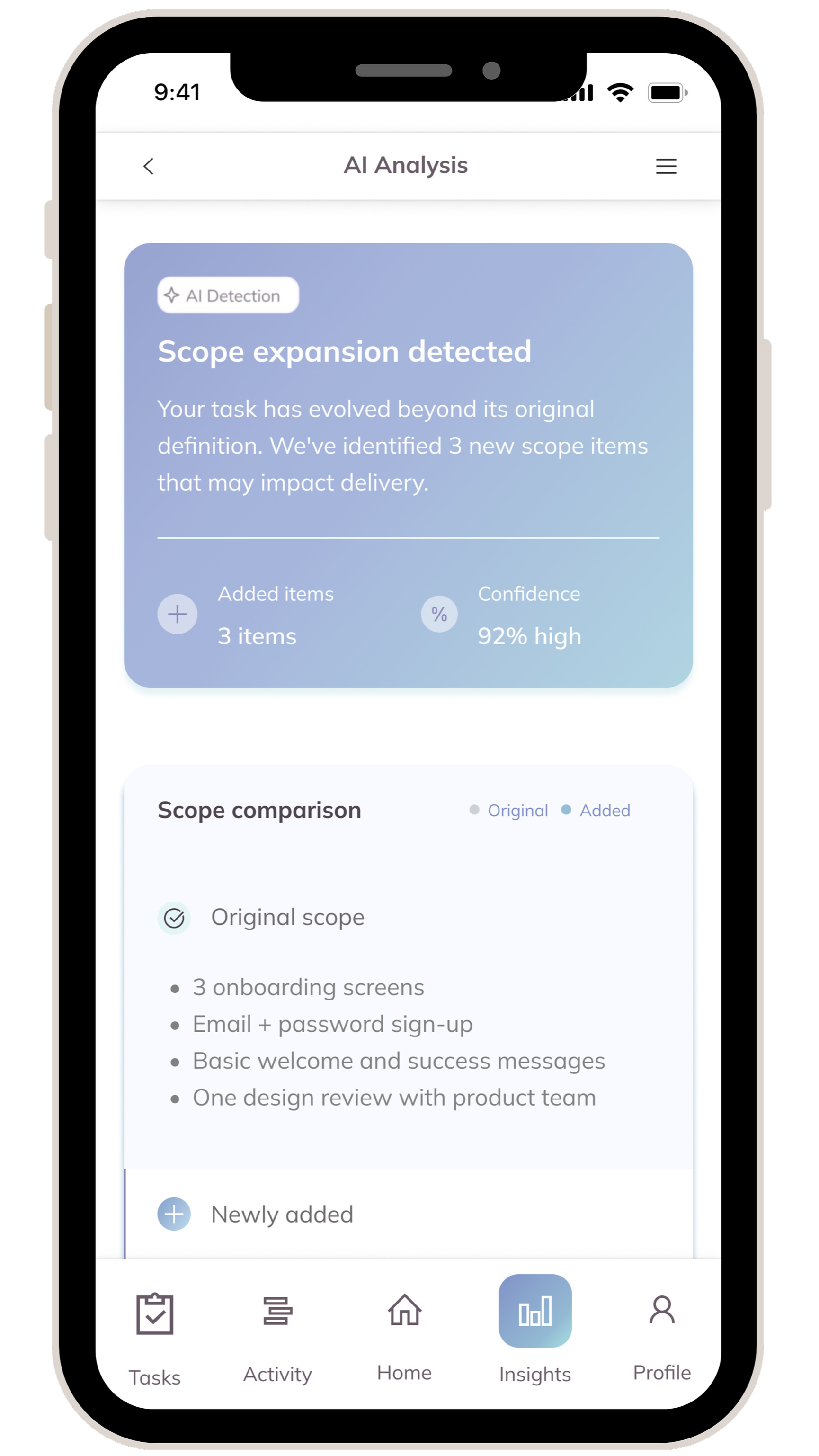

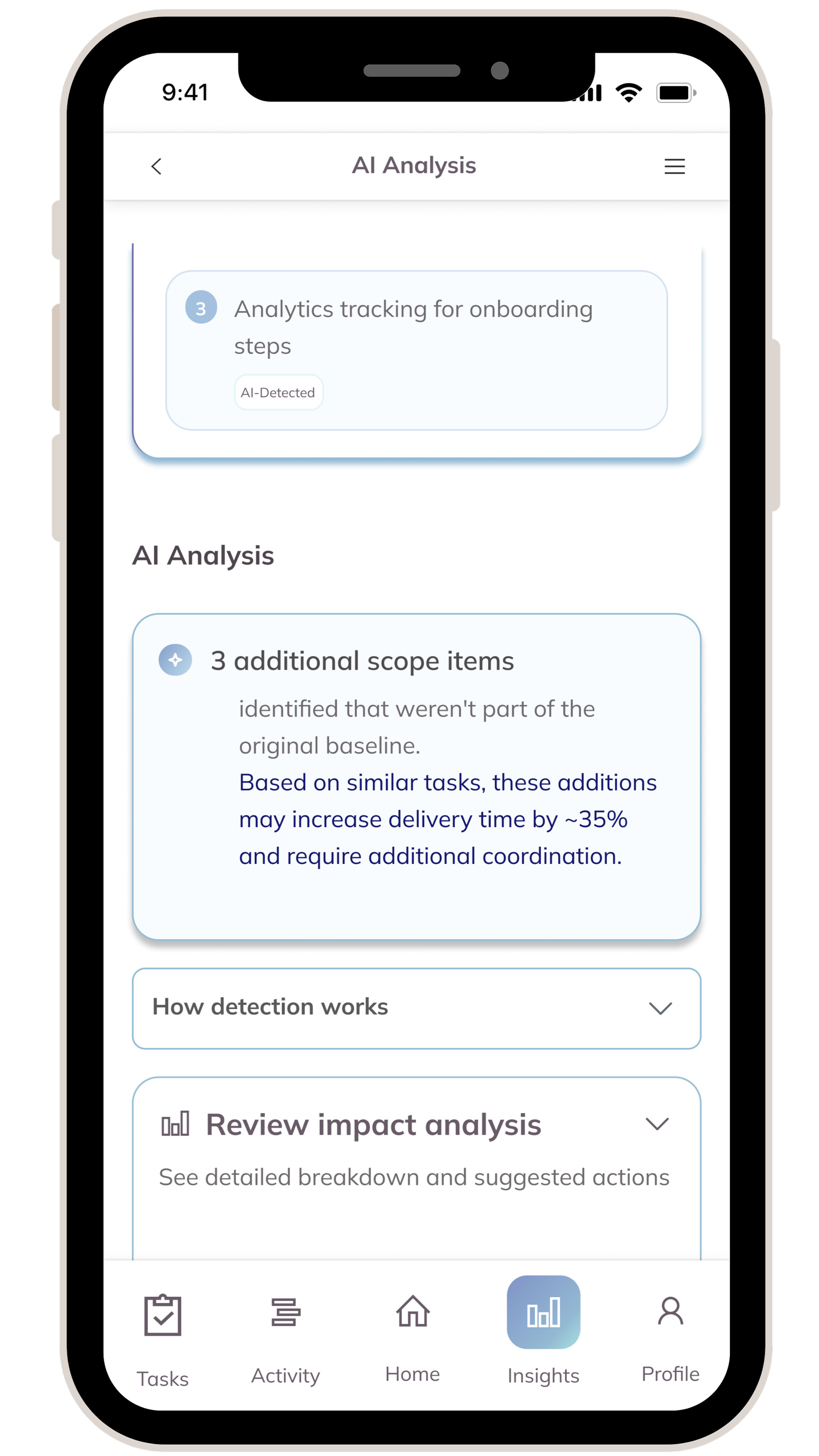

Clear, calm detection messaging

Instead of alarming language, the “Scope expansion detected” card explains what changed and why it matters, helping users understand the situation without feeling blamed or pressured.

Confidence transparency

Showing AI confidence (e.g., 92%) builds trust by being explicit about certainty, while avoiding technical complexity.

Actionable next steps

Presents clear options (adjust timeline, renegotiate scope) to help users move forward without guesswork.

Human-led decision support

AI provides guidance and estimates, but the final decision always stays with the user.

Impact framed as guidance, not judgment

Delivery impact is presented as a potential outcome (“may increase delivery time”) rather than a hard prediction, supporting informed decision-making.

Human-first decision flow

By deferring actions to a follow-up step, this screen focuses on understanding first, aligning with research showing users resist immediate enforcement.

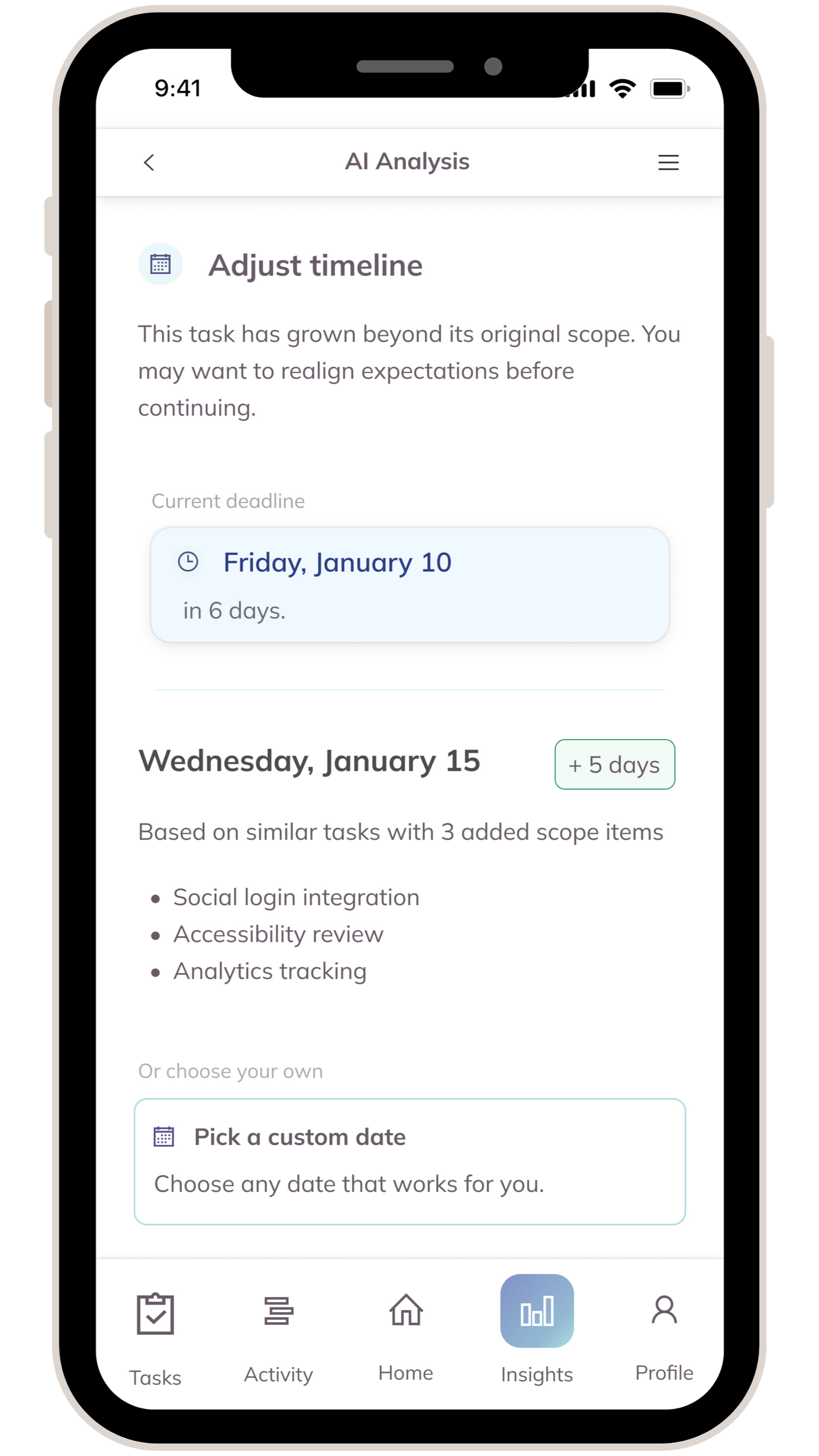

Solution 3: Making Scope Growth Actionable Through Time Ownership

Contextual reassurance before action

Clearly explains why the timeline needs adjustment, reducing anxiety and defensiveness.

AI-supported recommendation

The suggested new date is grounded in similar tasks, while the final decision stays with the user.

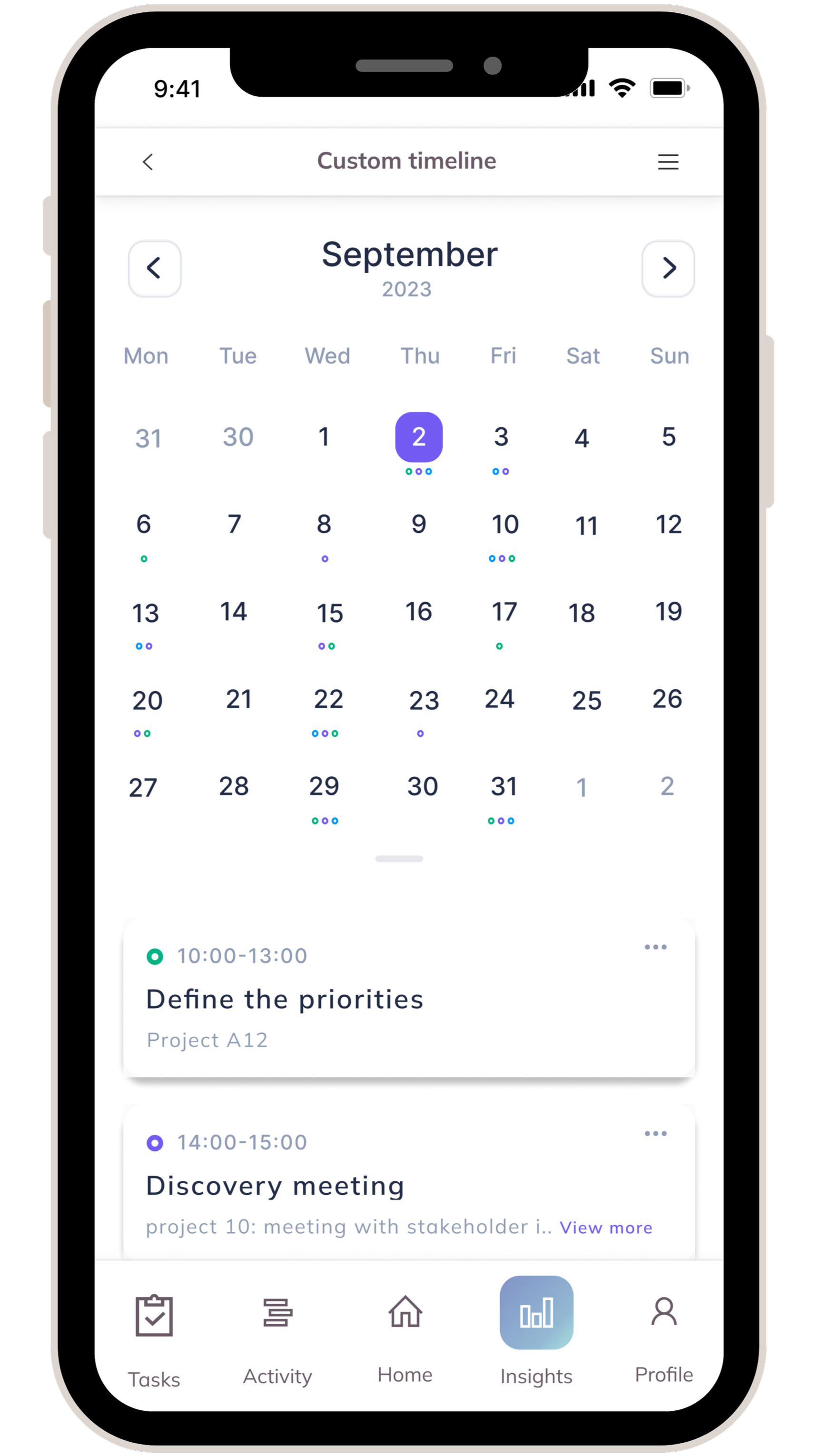

Visual time re-planning

A calendar view helps users mentally map changes against existing commitments.

Preserves task continuity

Existing tasks remain visible, reinforcing that scope growth affects real schedules.

Structured scope acknowledgment

Adding time requires new work to be explicitly named, scheduled, and owned.

Lightweight task creation

Minimal fields reduce friction while still capturing intent and accountability.